Apple Live Text vs Google Lens: Best Image Recognition Tool? (2021)

In this article, we compare Live Text and Google Lens on multiple fronts: basic features, system integration, accuracy, language support, and device support.

What is Live Text in iOS 15?

For anyone who hasn’t watched Apple’s developer conference, let me give you a quick overview of the new Live Text feature in iOS 15. Once you install iOS 15 on your iPhone, your device can identify text within pictures, and even straight from the camera viewfinder. That way, you can copy-paste text from the real world, look up that business you took a picture of, and more. It’s essentially Google Lens, but from Apple.

Live Text vs Google Lens: What Can It Do (Basic Features)

To kick things off, let’s take a look at the basic features offered by both Live Text and Google Lens. That way, we can see which of the two brings more to the table right off the bat.

Apple Live Text

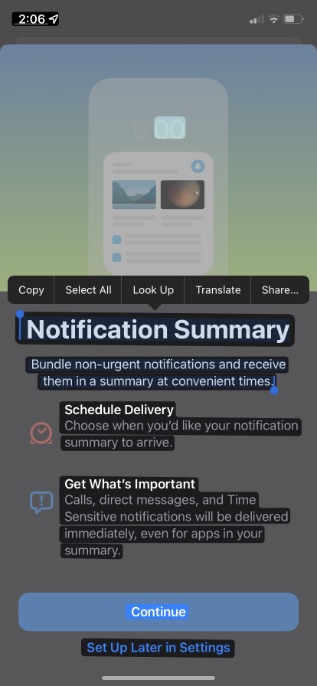

Apple Live Text, as I mentioned above, can identify text, phone numbers, email addresses, and more in pictures from your gallery, as well as straight from the camera app. There’s also Visual Lookup, which can identify animals and well-known landmarks, so you can get more information about them by tapping on them in your viewfinder.

Once you have identified text in a picture, you can use it in a variety of ways. Obviously, you can copy-paste the text wherever you would like, but there’s more that you can do. If you have identified an email address, Live Text will directly give you an option to create a new email to that address. You will get the option to call if the identified text is a phone number, open any identified address in Apple Maps, and more.

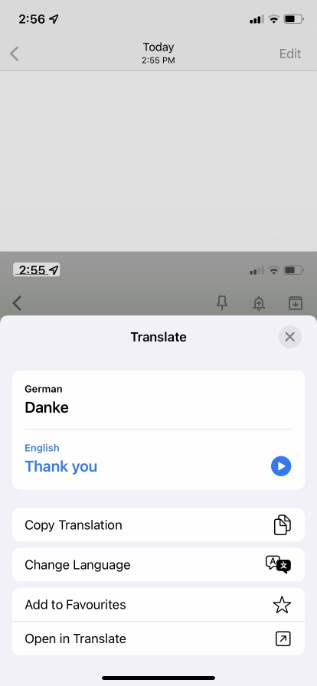

There’s also translation built-in thanks to the Apple Translate app. You can tap on the “Translate” button in the pop-up context menu to translate the identified text into English (or any of the supported languages).

Perhaps most useful for me is the fact that Live Text can understand when there’s a tracking number in a picture, and can let you directly open the tracking link, which is quite impressive.
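To make the idea of "detect a piece of text, then offer a matching action" more concrete, here is a minimal Python sketch. It is not how Apple implements Live Text; the regular expressions, the `suggest_actions` helper, and the UPS-style tracking format (`1Z` followed by 16 characters) are all simplified assumptions chosen purely for illustration.

```python
import re

# Illustrative patterns only (assumptions); real data detectors are far more robust.
PATTERNS = [
    ("email", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # Assumed UPS-style tracking number: "1Z" plus 16 letters or digits.
    ("tracking", re.compile(r"\b1Z[0-9A-Z]{16}\b")),
    # Phone numbers must not sit inside a longer alphanumeric token.
    ("phone", re.compile(r"(?<![A-Za-z0-9])\+?\d[\d\s().-]{7,}\d(?![A-Za-z0-9])")),
]

# Hypothetical mapping from a detected kind to the action offered to the user.
ACTIONS = {
    "email": "compose a new email",
    "tracking": "open the tracking link",
    "phone": "place a call",
}


def suggest_actions(recognized_text):
    """Return (kind, matched_text, action) tuples found in recognized text."""
    suggestions = []
    for kind, pattern in PATTERNS:
        for match in pattern.finditer(recognized_text):
            suggestions.append((kind, match.group(), ACTIONS[kind]))
    return suggestions
```

Calling `suggest_actions` on the text recognized in a photo of a shipping label would return one suggestion per detected item, which a UI could then turn into tappable options like the ones described above.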

Google Lens

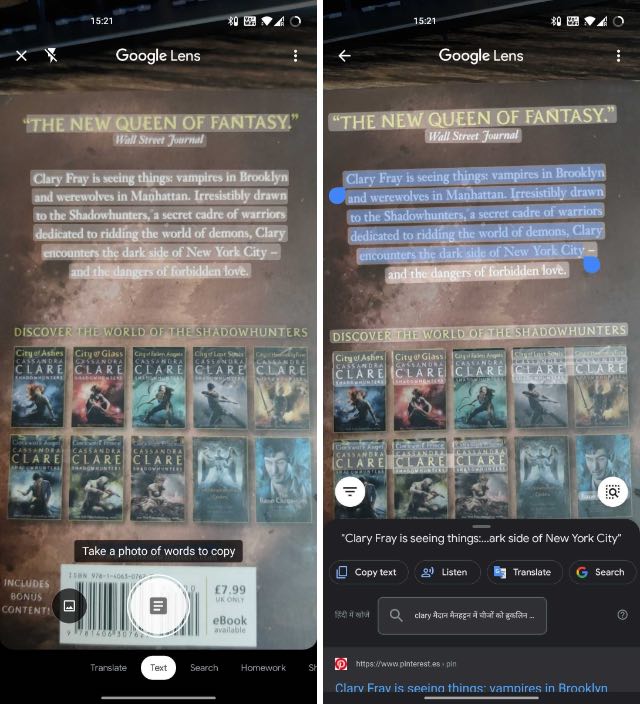

On the other hand, Google Lens can do a lot of neat things as well. Obviously, it can identify text within images or straight from your camera app. You can then copy-paste the highlighted text, or, similar to Live Text, make a phone call, send an email, and more. You can also create a new calendar event straight from Google Lens, which can come in handy.

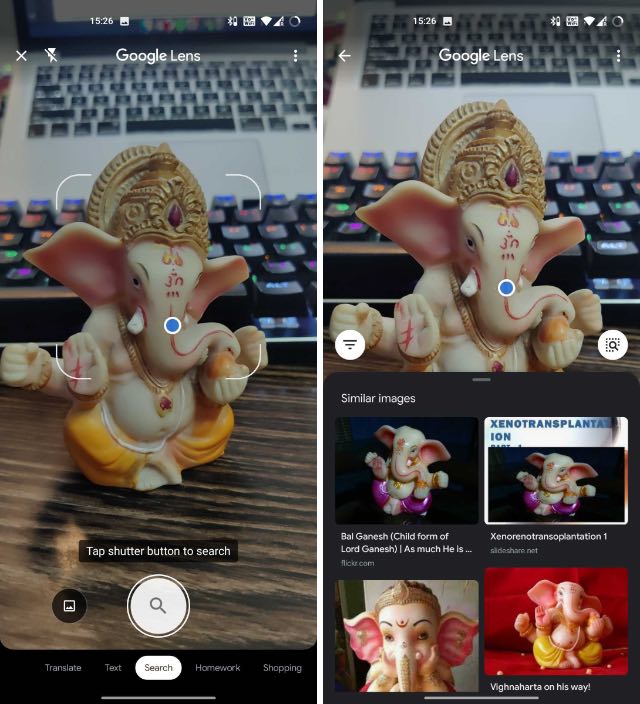

Another neat feature of Google Lens is business card scanning. If someone hands you their business card, you can scan it with Google Lens, and you will get the option to add a new contact with all their details filled in automatically. That’s really cool, and it’s something we often used while attending launch events and networking with people.

Now, Live Text’s Visual Lookup feature may be able to identify famous landmarks, books, animals, etc., but Google Lens is on a whole other level when it comes to object recognition. Thanks to Google’s expertise with search and image search, Google Lens can tap into all that knowledge and identify pretty much any object you see around you, whether it’s a plant, a pen, or a handbag you spotted your favorite celebrity carrying. Just scan the picture with Google Lens, and you will get search results for it. That’s a cool feature of Google Lens that Apple’s Live Text/Visual Lookup doesn’t have.

Moreover, Google Lens can also help you with your homework, which is another thing that Live Text simply can’t do. You can scan a math problem with Google Lens, and it will give you step-by-step solutions for it. It also works for other subjects, including physics, biology, history, chemistry, and more.

Clearly, Google Lens is more feature-rich than Apple’s Live Text at this point. Hopefully, Apple will make Live Text more useful and add more features into the mix as the years go by.

Live Text vs Google Lens: Integration

Moving on, let’s talk about the integration of these image recognition features into the operating system as a whole. Apple has always had a unique focus on integrated experiences, and that extends to Live Text as well. On your iPhone running iOS 15, Live Text is baked into the default Photos app, as well as the camera. While that’s also the case for Google Lens on Android phones, the difference is that you have to tap to enable Google Lens in order to start scanning text, objects, or whatever else you are trying to do. Live Text, on the other hand, is pretty much always on. You can just long-press on the text you are trying to copy-paste or the phone number you want to call, and you can get on with it.

What’s more, Live Text also comes baked into iOS 15 itself. So, if you are using a messaging app like WhatsApp, or any other app like Notes or the email app on iPhone, and want to scan and paste some text from the real world, you can use the “Text from Camera” feature to input the text directly.

A similar feature exists with Google Lens as well, but in that case, you will have to first switch over to your camera app, head into Google Lens, scan the text and copy it, and then go back to the original app and paste it there. Those are a lot of extra steps that you don’t need to bother with if you are using Live Text.

Apple is usually really good at integrating its features into the devices it sells, and Live Text is no exception. It works wherever you need it to, and it makes itself useful in ways that you will actually want to use. I can’t say the same for Google Lens on Android, especially not in everyday use. Clearly, Live Text is better in this regard, but I hope Google Lens brings a similar kind of integration soon. When good features are copied from other places, it ends up making the products better for us, and I’m all for it.

Live Text vs Google Lens: Accuracy

As far as text recognition accuracy is concerned, Google Lens and Apple Live Text are about equally good. I have used Google Lens extensively, and I have been using Live Text on my iPhone for the past couple of days, and I am yet to notice any major accuracy issues with either tool. That said, I have noticed that Live Text sometimes mistakes “O” (the letter) for “0” (the digit) and vice versa. That can be a little annoying, and it’s not something I want to give a pass to, but considering this is still a beta release, I’m not going to consider it a big issue.

On the other hand, when it comes to object recognition, Google Lens has the complete upper hand. It can not only identify more objects and animals than Visual Lookup on an iPhone, but it’s also accurate with the results. Visual Lookup has completely failed at recognizing any objects for me. I even fed it a picture of the Golden Gate Bridge, which is the exact example Apple used in its WWDC 2021 keynote, and that didn’t work either. Clearly, the feature needs a lot of work.
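The “O” versus “0” mix-up mentioned above is a classic OCR ambiguity, and one common way to reduce it is to look at the other characters in the same token. The following Python sketch shows that idea under simple assumptions; the `fix_o_zero` helper is hypothetical and is not something either Apple or Google is known to use.

```python
def fix_o_zero(token):
    """Resolve O/0 confusion in one token using its other characters as context.

    Assumption for this sketch: a token that is otherwise mostly digits
    should contain the digit 0, and one that is otherwise mostly letters
    should contain the letter O.
    """
    others = [c for c in token if c not in "Oo0"]
    digits = sum(c.isdigit() for c in others)
    letters = sum(c.isalpha() for c in others)
    if digits > letters:
        return token.replace("O", "0").replace("o", "0")
    if letters > digits:
        return token.replace("0", "O")
    return token  # ambiguous context: leave the token unchanged
```

A heuristic like this helps with plain numbers and plain words, but it deliberately leaves evenly mixed tokens alone, so codes that combine letters and digits would still need smarter handling.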

Overall, Google Lens has better accuracy than Apple’s Live Text feature in iOS 15. However, it’s a close contest as far as text, email addresses, phone numbers, etc., are concerned.

Live Text vs Google Lens: Language Support

Since both Google Lens and Live Text support translation, it’s important to consider which languages they both work in. That also extends to which languages they can even identify text in, and well, when it comes to these metrics, Live Text is miles behind Google Lens. Here are the languages supported by Live Text for translation:

English, Chinese, French, Italian, German, Portuguese, and Spanish

On the other hand, Google Lens supports translation in every language that Google Translate can work with. That is more than 100 languages for text translation. Next to that number, Apple’s seven-language support pales in comparison.

Live Text vs Google Lens: Device Support

Apple’s new Live Text feature is available on iPhone with iOS 15, iPad with iPadOS 15, and Mac with macOS 12 Monterey. Google Lens is available on all devices with Android 6.0 Marshmallow and above. Plus, it is integrated into Google Photos and the Google app, meaning you can use it on iOS devices as well. It’s no surprise that Google Lens is available on more devices than Apple Live Text. So, if you use multiple devices and want a single solution for image recognition, Google Lens has a higher chance of being available across different ecosystems.

Google Lens vs Live Text: Which One Should You Use?

Now that we have gone through all the different comparison points for both Live Text and Google Lens, the question remains: which one should you use? That answer will vary from person to person. As someone who is deep into the Apple ecosystem, I have used Live Text more in the last week than I have ever used Google Lens. However, Google Lens offers unique capabilities that Live Text and Visual Lookup don’t. It all depends on your use cases and your workflow. I don’t often find myself wondering where I can buy a pair of jeans that Chris Hemsworth was wearing. But I do find myself wanting to add text to my WhatsApp conversation without copy-pasting it off my Mac, and Live Text lets me do that way more easily than Google Lens.

So, what do you think about Google Lens vs Live Text? Which one do you think is better and why? Let us know in the comments.